#1: Have some!

…

Best practices for documentation

Maintenance and Non-OEM spares

So I need to replace the battery on my trusty vacuum bot, an iRobot Roomba 780. Judging from my purchase history, I had owned the robot for approx. 18 months when I replaced the original battery with a knock-off spare claiming 50% more capacity (4500 mAh vs. 3000 mAh for the original pack).

By now, 25 more months have passed and I need to get another replacement. Just looking at the timeline, there is precious little reason to even think about getting the original part when the knock-off lasted longer than the original one.

So in case anyone is wondering, here’s what I’m getting:

-Jan

Issues preparing for upgrade to SCVMM 1801

I just ran into a small hiccup upgrading my System Center Virtual Machine Manager 2016 to the new version 1801. I wasn’t able to find any documented cases of anyone running into this, so here we go:

The upgrade to SCVMM 1801 actually requires uninstalling SCVMM 2016. This failed on my installation just at the point where I was clicking on the “Remove features” button in the setup dialog.

In C:\ProgramData\VMMLogs\SetupWizard.log I found the following entry:

11:52:28:Uncaught Exception: Threw Exception.Type: Microsoft.VirtualManager.Utils.CarmineException, Exception.Message: Unable to detect cluster configuration of the node. Ensure that the user has permissions to detect cluster node configuration.
11:52:28:StackTrace:
   at Microsoft.VirtualManager.Setup.ClusterServiceHelper.get_IsAClusterNode()
   at Microsoft.VirtualManager.Setup.AddRemoveComponentsPage.EnterPage()
   at Microsoft.VirtualManager.SetupFramework.PageNavigation.WaitEnterSet(Page page)
   at Microsoft.VirtualManager.Setup.AddRemovePage.RemoveComponent_Click(Object sender, RoutedEventArgs e)
   at System.Windows.EventRoute.InvokeHandlersImpl(Object source, RoutedEventArgs args, Boolean reRaised)
   at System.Windows.UIElement.RaiseEventImpl(DependencyObject sender, RoutedEventArgs args)
   at System.Windows.Controls.Primitives.ButtonBase.OnClick()
   at System.Windows.Controls.Button.OnClick()
   at System.Windows.Controls.Primitives.ButtonBase.OnMouseLeftButtonUp(MouseButtonEventArgs e)
   at System.Windows.RoutedEventArgs.InvokeHandler(Delegate handler, Object target)
   at System.Windows.RoutedEventHandlerInfo.InvokeHandler(Object target, RoutedEventArgs routedEventArgs)
   at System.Windows.EventRoute.InvokeHandlersImpl(Object source, RoutedEventArgs args, Boolean reRaised)
   at System.Windows.UIElement.ReRaiseEventAs(DependencyObject sender, RoutedEventArgs args, RoutedEvent newEvent)
   at System.Windows.UIElement.OnMouseUpThunk(Object sender, MouseButtonEventArgs e)
   at System.Windows.RoutedEventArgs.InvokeHandler(Delegate handler, Object target)
   at System.Windows.RoutedEventHandlerInfo.InvokeHandler(Object target, RoutedEventArgs routedEventArgs)
   at System.Windows.EventRoute.InvokeHandlersImpl(Object source, RoutedEventArgs args, Boolean reRaised)
   at System.Windows.UIElement.RaiseEventImpl(DependencyObject sender, RoutedEventArgs args)
   at System.Windows.UIElement.RaiseTrustedEvent(RoutedEventArgs args)
   at System.Windows.Input.InputManager.ProcessStagingArea()
   at System.Windows.Input.InputManager.ProcessInput(InputEventArgs input)
   at System.Windows.Input.InputProviderSite.ReportInput(InputReport inputReport)
   at System.Windows.Interop.HwndMouseInputProvider.ReportInput(IntPtr hwnd, InputMode mode, Int32 timestamp, RawMouseActions actions, Int32 x, Int32 y, Int32 wheel)
   at System.Windows.Interop.HwndMouseInputProvider.FilterMessage(IntPtr hwnd, WindowMessage msg, IntPtr wParam, IntPtr lParam, Boolean& handled)
   at System.Windows.Interop.HwndSource.InputFilterMessage(IntPtr hwnd, Int32 msg, IntPtr wParam, IntPtr lParam, Boolean& handled)
   at MS.Win32.HwndWrapper.WndProc(IntPtr hwnd, Int32 msg, IntPtr wParam, IntPtr lParam, Boolean& handled)
   at MS.Win32.HwndSubclass.DispatcherCallbackOperation(Object o)
   at System.Windows.Threading.ExceptionWrapper.InternalRealCall(Delegate callback, Object args, Int32 numArgs)
   at System.Windows.Threading.ExceptionWrapper.TryCatchWhen(Object source, Delegate callback, Object args, Int32 numArgs, Delegate catchHandler)
   at System.Windows.Threading.Dispatcher.LegacyInvokeImpl(DispatcherPriority priority, TimeSpan timeout, Delegate method, Object args, Int32 numArgs)
   at MS.Win32.HwndSubclass.SubclassWndProc(IntPtr hwnd, Int32 msg, IntPtr wParam, IntPtr lParam)
   at MS.Win32.UnsafeNativeMethods.DispatchMessage(MSG& msg)
   at System.Windows.Threading.Dispatcher.PushFrameImpl(DispatcherFrame frame)
   at System.Windows.Application.RunDispatcher(Object ignore)
   at System.Windows.Application.RunInternal(Window window)
   at Microsoft.VirtualManager.Setup.Program.UiRun()
   at Microsoft.VirtualManager.Setup.Program.Main()

So what this means is that the setup is unsuccessfully trying to determine whether SCVMM is installed in a clustered setup. Which mine is not. Has never been.
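If you want to check whether your uninstall is failing for the same reason, something like the following should pull the relevant entries out of the setup log. This is only a minimal sketch: it assumes the default log path mentioned above and that the exception type is logged under the same name as in the entry above.

```powershell
# Path as given above; adjust if your VMM logs live elsewhere.
$log = 'C:\ProgramData\VMMLogs\SetupWizard.log'

# Find the most recent lines mentioning the exception type from the entry above.
Select-String -Path $log -Pattern 'CarmineException' -SimpleMatch |
    Select-Object -Last 5 -Property LineNumber, Line |
    Format-List
```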

The solution is to actually *install* the Windows Feature Failover Clustering. This way the check can run and setup will continue. There is no need to actually configure clustering.
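One way to do this is from an elevated PowerShell prompt on the VMM server. Treat this as a sketch rather than the one true way: it assumes a Windows Server installation where the ServerManager cmdlets are available, and that installing the feature alone (without the management tools) is enough to satisfy the check. The same feature can also be added through Server Manager.

```powershell
# Check whether the Failover Clustering feature is already installed.
Get-WindowsFeature -Name Failover-Clustering

# Install the feature only; this does not create or configure a cluster.
Install-WindowsFeature -Name Failover-Clustering
```

Depending on the server, the installation may ask for a restart before you rerun the SCVMM setup.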

Case of the missing Outlook SSO

If you’re in IT, you certainly know this scenario: there’s something in your environment you just can’t get to work the way you want. You’ve spent countless hours reading documentation, searching community forums and fiddling with settings. It’s not a showstopper, and there is a workaround, or it’s just a single prompt that shows up every once in a while, so you can’t justify powering through until the issue is solved, but you return to it every so often. Then it happens: you’re working on something completely unrelated, reading through docs or configuring settings, and you stumble upon it, a small text box that explains your issue and how to fix it.

This just happened to me. I’ve been trying to get SSO (Single Sign On) to work for our corporate PCs but haven’t had any luck whatsoever in years of trying. No matter what I did, we always still got prompted to enter credentials for Outlook and Skype for Business. Today I learned why:

Like many organizations, we’re using Office 365. We also use AzureAD Connect to sync our on-premises Active Directory to the Microsoft Cloud and have set it to enable SSO for our users – which works really well for everything but Outlook. The issue of logging in to Skype for Business went away when we migrated to the newer Teams client but Outlook remained a thorn in my side – one I just couldn’t get myself to figure out.

Lately, we have been trying to roll out Microsoft’s Security Defaults for our AzureAD environment and activate Multi-Factor Authentication (MFA) for all our users. The issue I ran into was that once I enabled the AzureAD Security Defaults, none of my users were able to log in to Outlook on their PCs anymore. Searching through the docs put me on the right track: for some reason, our Outlook installs were using legacy authentication. Some Google-Fu put me on to registry keys I was supposed to add to enable modern authentication in Outlook, to no avail.

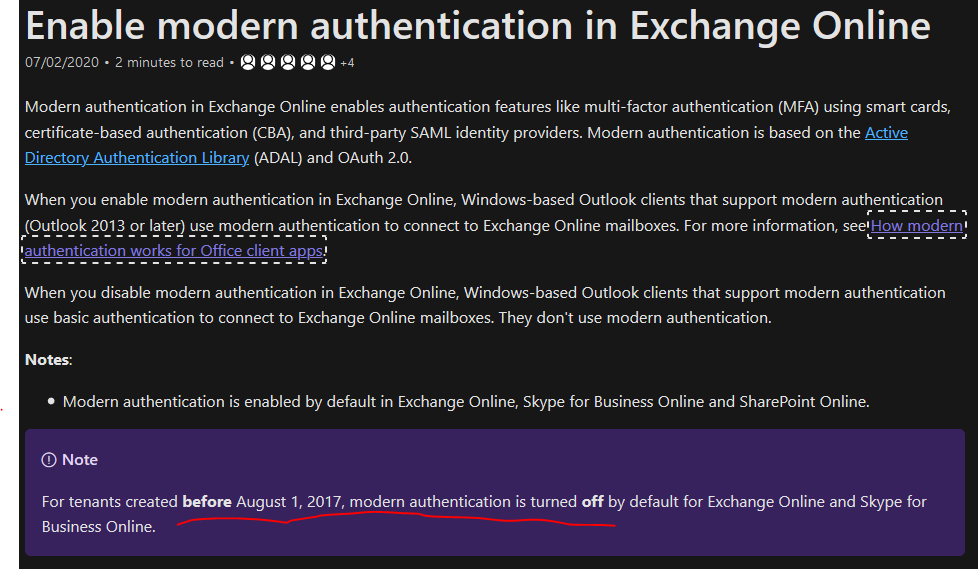

Careful reading of the docs, however, put me on to the source of my pain:

As we’ve been using Office 365 for a long time, this was us! Mind you, all documentation available on the web is (of course…) written against the current defaults, which have modern authentication turned on.

Quickly jumping into PowerShell solves this:

$UserCredential = Get-Credential

$Session = New-PSSession -ConfigurationName Microsoft.Exchange -ConnectionUri https://outlook.office365.com/powershell-liveid/ -Credential $UserCredential -Authentication Basic -AllowRedirection

Import-PSSession $Session -DisableNameChecking

Set-OrganizationConfig -OAuth2ClientProfileEnabled $true

Remove-PSSession $Session

That’s it. Once I set this, Single Sign On to Outlook just worked. MFA just worked. And I have one less thing on the to-do list that I’ve been visiting over and over for years.
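A note for anyone following along today: the session above connects with Basic authentication, which may no longer be available in your tenant, particularly once Security Defaults or MFA are enforced. The sketch below makes the same change through the ExchangeOnlineManagement module instead. It assumes the module is already installed, and `admin@contoso.com` is a placeholder account, not something from my environment.

```powershell
# Assumption: the module is installed, e.g. via
#   Install-Module -Name ExchangeOnlineManagement -Scope CurrentUser
Import-Module ExchangeOnlineManagement

# Placeholder admin account; replace with your own. The sign-in prompt supports MFA.
Connect-ExchangeOnline -UserPrincipalName admin@contoso.com

# Show the current state of the modern authentication switch.
Get-OrganizationConfig | Format-Table Name, OAuth2ClientProfileEnabled -AutoSize

# Turn modern authentication on for the tenant.
Set-OrganizationConfig -OAuth2ClientProfileEnabled $true

# Close the session when done.
Disconnect-ExchangeOnline -Confirm:$false
```

Checking the value with `Get-OrganizationConfig` first tells you whether your tenant is affected at all before you change anything.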

-Jan